The White House announced federal efforts to better understand and harness artificial intelligence for government, though full embrace of the technology is still likely a ways away.

Agencies are often slow to adopt technology, even with frameworks in place, because it is costly, labor-intensive and, in some ways, intimidating. To find out why, Federal Times spoke with José-Marie Griffiths, a former member of the National Security Commission on Artificial Intelligence and chairman of its Workforce Subcommittee.

Griffiths, now the president of Dakota State University where she works to educate the future workforce on AI, helped shape tech reforms and recommendations to leverage AI for the U.S. national security and defense workforces.

This interview has been edited for clarity and length.

Federal Times: The White House has said it intends to capitalize on AI to contend against national security threats and improve the functioning and efficiency of government. Where are we in this?

José-Marie Griffiths: “The federal government, and probably at state levels to some extent, too, have been slower to move than other institutions. They’ve become large institutions, and it’s hard to move them. And the mechanisms for moving quickly and updating, it’s just very hard.

The military can move a bit faster because they truly have this sort of top-down command structure. They can insist on it, to some extent.

So how do you get pockets of innovators within government? You need to find them. You need to empower them to innovate as they can and to gradually diffuse their innovations out to others.”

FT: What kind of recommendations were you making with your peers on the National Security Commission on Artificial Intelligence and how could those be taken up quickly?

JMG: “We were not just developing recommendations, but embodying those recommendations in language that could be absorbed directly into pieces of legislation, into existing legislation or even into executive orders. We tried to make it easy for everyone to adopt the recommendations.

Our recommendation at that time was we aren’t moving fast enough. And we perceived [that] into the geopolitical structure, [with] things going on around the world that we can no longer ignore — particularly, let’s face it, China’s ambitions, which China has been very open about and very clear about. In a way, it’s as if we let that creep up on us.

Ultimately, education and communication are going to be key.”

FT: As agencies look to cyber strategies as their North Star, what steps might be easier to tackle first? How can agencies move forward?

JMG: “I think ultimately, the real dilemma is we’ve got whole layers of technology that really haven’t been changed. I mean, they’re still dealing with a fair amount of obsolete technology.

So when you want to do something new, you’ve got this really vulnerable environment. So how much effort are you going to put into protecting the vulnerable, rather than move forward and just replace what you have?

Even when there’ve been recommendations to move forward, they haven’t always been implemented, and it gets bogged down with ‘who’s going to pay for it?’ Agencies work one year at a time, so there’s no continuity in that sense.

If there were a way of saying ‘we’re going to approve a five-year plan,’ now we know you’re gonna get funding every year for the next five years, unless something drastically goes wrong or we need the money because of an emergency. We don’t have that long-term view in government.”

FT: And what about workforce implications? Will humans remain a part of AI? And if they are, how can federal agencies ensure they have the staff support to shepherd this technology in light of hiring challenges?

JMG: “In most applications, we haven’t truly taken the human out of the loop. So that’s a question as we go forward: what’s the role of the human?

We don’t have a large enough workforce in the computing and information sciences generally. We have 750,000 vacancies in the United States alone in cybersecurity positions. And our prediction was there would likely be eventually even more openings for people with artificial intelligence expertise than with cybersecurity expertise. And so we face this huge shortage of people.

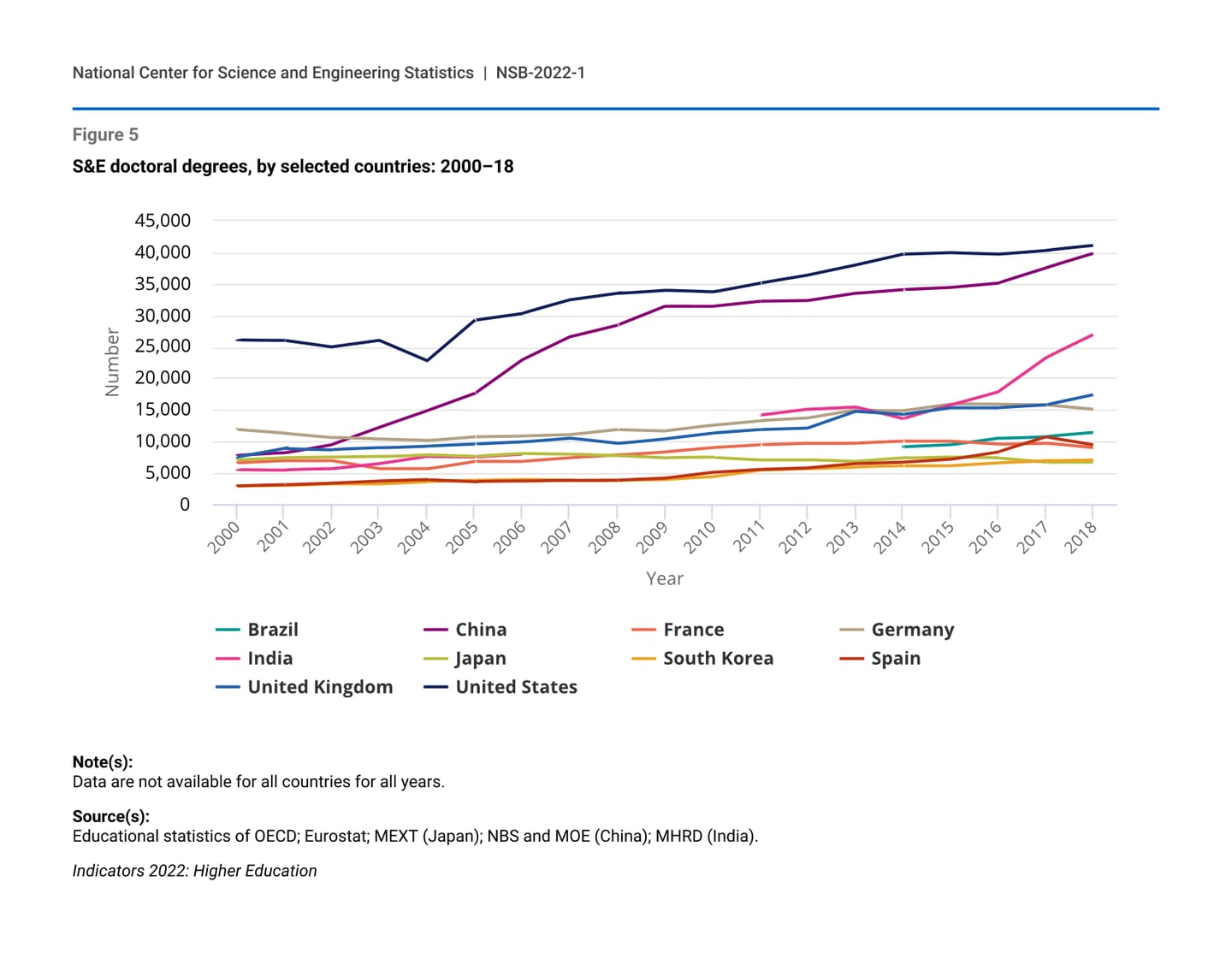

The number of people going into the computing related sciences, it’s going up, but the increases are our international students. And if we want to talk about the diversity of that workforce, we are way back to [late 1990s] levels of women going into those fields. We’ve made a lot of progress over a period of years, and it looks as though we’ve gone all the way back. So we do face some critical issues.”

FT: So, we’re in a global competition for talent as well as a domestic one.

JMG: “We have to say homegrown talent isn’t going to be enough. And [we] have to have an immigration system that recognizes [international talent]. We can start off with the increase in foreign students who are getting degrees and perhaps give them longer work permits, get them green cards a little bit sooner. There are ways to import technologists, share capabilities across countries, et cetera, especially the Five Eyes countries where we already have intelligence sharing.”

FT: What will it take for agencies to make up workforce gaps?

JMG: “It has to be a multi-dimensional approach.

We probably need to connect people to government missions.

Internships are great, both for the agencies to learn about people and for people to learn about agencies. The federal government would have to connect, I think, to professors and instructors so that they know more.

Google and Microsoft aren’t going to pick up everyone and we know they let people go, too.

The second area [of recruiting] is people already in the workforce. There are a lot of people in the workforce who’ve got technology-related degrees, so I think up-skilling [and] re-skilling of people already in the workforce is a way to go. It’s not for everyone. But for some people, if they have the aptitude and interest, that could be a way to jumpstart. Take a group of people, get them up to speed, and then have them help you get the next level of people up to speed.

And then the third area is in our K-12 systems. We have a Cyber Academy now that’s going to high schools in our state. Students will be able to take courses in computer science, cybersecurity and artificial intelligence and come into college with a full year of college [credit], as well as their high school diploma.

We’ve got to get out into the K-12 environment and not scare them off.

Industry is also willing to help government if we can move the technology implementation forward. We talked about a sort of national guard in IT, and reserves in cyber, where people could go and do their duty and help out as needed.”

FT: How do agencies sell the mission or tell their story to draw talent in?

JMG: “We talk a lot about the skills we need, but I don’t know that government says too much about why they need them. What would it do for the government, and what would it do for the people that the government serves?

And I don’t think young people have a real idea of what government does. Their interaction with government is pretty limited. And their parents’ interaction is, you know, IRS. We hear about what’s going on in Washington, and it’s not always appealing.”

FT: Will the federal government struggle to determine the regulatory environment? I mean, can you even regulate something that evolves so fast and can be developed by everyday people in their own homes?

JMG: “I thought it was a great move when open AI evolved. Everybody can do this. It’s just, now, there are so many people doing it. And we have to understand who’s working on AI and doesn’t necessarily have the best interests [at heart]. You can’t protect against everything, but I think we’re in for a long, long battle.

There are all these sort of lone-wolf players, gangs of people and people who just like to disrupt. And they’re very tech savvy, and they’ve largely taught themselves.

I don’t think we should try to control it totally. But we should say ‘where are the real risks?’”

Molly Weisner is a staff reporter for Federal Times where she covers labor, policy and contracting pertaining to the government workforce. She made previous stops at USA Today and McClatchy as a digital producer, and worked at The New York Times as a copy editor. Molly majored in journalism at the University of North Carolina at Chapel Hill.