An FBI investigation revealed that Teixeira, a 22-year-old who ran a server on Discord called “Thug Shaker Central,” spent much of his life online, talking primarily with other young men via messages, video calls and voice chats. He chatted about guns and military gear, threatened his school, made racist and antisemitic jokes, traded conspiracy theories, discussed antigovernment sentiments, and in a bid to show off, shared some of the military’s most closely guarded secrets about the Russia-Ukraine war and the Middle East.

By the time the young airman was arrested in 2023, media scholar PS Berge had been studying Discord and its users for three years and had created an online consortium of other academic researchers who were doing the same. That an intelligence leak occurred on the site, creating a national security incident, didn’t come as a shock to her.

“My response was, ‘Of course. Of course this would happen on Discord,’” Berge said. “Because on a platform like this, you share everything with your people. Everything about your life. So, why not share national security secrets?”

Teixeira pleaded guilty in March to six counts of willful retention and transmission of national defense information. His sentencing is scheduled for September, and prosecutors are asking that he serve between 11 and 17 years in prison.

The same month Teixeira agreed to a plea deal, the FBI revealed it had investigated another service member in 2022 for leaking information on Discord.

Former Air Force Staff Sgt. Jason Gray, who served as a cyber analyst at Joint Base Elmendorf-Richardson, Alaska, admitted to running a Facebook group for followers of Boogaloo, a loosely organized, antigovernment movement that advocates for a second Civil War. Gray was disgruntled with his military career, and he discussed his dissatisfaction with the U.S. government in several Discord channels created for the Boogaloo movement, according to a 2022 FBI affidavit that was unsealed in March.

Gray, who used the account name LazyAirmen#7460, was accused of posting a classified image in a private Discord channel that he “likely obtained” from his access to National Security Agency intelligence, the affidavit states.

Investigators said the image could’ve been shared “in furtherance of the Boogaloo ideology,” but didn’t elaborate on the image’s details. It’s uncertain whether the FBI is still investigating the potential leak. But while searching Gray’s electronic devices for evidence of an intelligence breach, authorities discovered hundreds of images of child pornography. Gray is currently serving five years in federal prison on multiple child pornography charges.

Oversharing is a hallmark of Discord, an online world where members of certain channels talk all day, every day, and even fall asleep together on voice calls, said Megan Squire, a computer scientist and deputy director for data analytics at the Southern Poverty Law Center.

People who study the platform agree that it’s not inherently bad — it’s used by millions of gamers, students, teachers, professionals, hobbyists and members of the military community to communicate and socialize. However, extremists have hijacked a part of the platform to radicalize and recruit others to their causes, said Jakob Guhl, senior manager for policy and research at the Institute for Strategic Dialogue.

Following the leak of national security secrets and other high-profile, nefarious uses of the platform in recent years, researchers are grappling with what to think of the platform’s small but headline-grabbing dark side, and many disagree on whether Discord as a company is doing enough to root out bad actors.

“It’s always a bit difficult to strike the right tone between not scaring people off the platform, because the majority of users are completely fine, but also highlighting that there is an actual issue of radicalization,” Guhl said. “It’s not the biggest or most offending platform, but it definitely plays a crucial role among this network.”

‘Not inherently evil’

The National Consortium for the Study of Terrorism and Responses to Terrorism, known as START, studied decades of violent extremist attacks and found a military background to be the most commonly shared characteristic among those who committed or plotted mass casualty attacks from 1990 through 2022, more so than criminal histories or mental health problems.

Researchers from START said the study revealed why extremist groups tend to focus recruitment efforts toward people with military service records: Even a small number of them can have an outsized impact inside extremist movements.

While such recruitment occurs on Discord, Guhl, Berge and Squire agreed that the mere presence of service members and veterans on the platform isn’t a cause for concern.

“It’s a popular platform and not inherently evil,” Squire said. “I’d be much more concerned about military folks on 4chan, Telegram, places like that. Nothing good is happening on those platforms, but Discord could be useful.”

In fact, Berge said, it can be a valuable forum for marginalized people to foster a sense of community. On its “about” page, Discord describes its mission as one that helps users find a sense of belonging.

“Discord is about giving people the power to create space to find belonging in their lives,” the company’s mission statement reads. “We want to make it easier for you to talk regularly with the people you care about. We want you to build genuine relationships with your friends and communities close to home or around the world.”

That’s what the veterans group Frost Call is doing on the platform. The nonprofit encourages veterans and service members to stay connected through gaming, one of its founders told Military Times last year. As of June, it boasted 390 members.

“When we founded Frost Call, we built an organization around this idea of bringing veterans together, helping to improve camaraderie that’s missing from military service,” Marine Corps veteran Wesley Sanders said last year. “It serves an enormous mental health need, but also ... an existential need for a lot of veterans.”

Moreover, when new users join Discord, extremist elements of the platform are not easily visible.

Discord is made up of millions of servers centered on various topics. Users can join up to 100 servers, and each server has numerous text, voice and video channels. When a new user creates an account and searches servers to join, the platform will suggest “its most popular, most successful, public-facing communities,” rather than any disquieting, invite-only communities, Berge said.

“If you are a standard user, and if you’re signing in to Discord for your general interests — maybe you’re looking for fellow students or fellow veterans — 90% of the time, you’re not going to accidentally stumble upon an extremist group,” she said. “They actually go through a lot of effort to make these spaces insulated, to make them difficult to find.”

When using Disboard, a third-party search platform for Discord servers, prompts such as “Nazi” or “white supremacist” won’t elicit results like they used to, Berge said. In a 2021 study, she found thousands of Discord servers that marketed themselves on Disboard as hateful and Nazi-affiliated spaces.

“You used to be able to search for those terms and find communities. It was horrifying,” Berge said. “Those servers still exist, but they’ve changed the ways they’re identified, and in some cases, we know that high-profile, toxic communities have been shut down.”

Extremists find a foothold

Founders Jason Citron and Stan Vishnevskiy created Discord in 2015 as a way to allow friends around the world to communicate while playing video games online. Its popularity exploded during the Covid-19 pandemic, when lockdowns went into effect and many people became more isolated than ever before.

Just two years after it launched, Discord gained notoriety as the platform of choice for facilitators of the 2017 “Unite the Right” rally in Charlottesville, Virginia. Organizers, including some veterans, used Discord to share propaganda and coordinate the protest, which turned deadly. James Fields was convicted of killing Heather Heyer when he drove his car into a group of counterprotesters. Fields had joined the Army in 2015 but was separated quickly because of a cited lack of motivation and failure to train.

In 2022, Discord made headlines again after a mass shooting at an Independence Day parade in Highland Park, Illinois, where seven people were killed and dozens more injured. The suspected shooter ran his own Discord server called “SS,” where he complained about “commies,” short for “communists,” according to posts archived by the nonprofit website Unicorn Riot.

That same year, an 18-year-old white gunman killed 10 Black people at a supermarket in Buffalo, New York. The gunman, Payton Gendron, spent months writing plans for the attack in a diary he kept on a private Discord server, visible only to him. About 30 minutes before the attack, Gendron sent out invitations for others to view the diary, and 15 people accessed it, according to Discord.

The platform again faced scrutiny following Teixeira’s leak of national security secrets.

“It’s periodic. Every couple of years, it seems like there’s something,” Squire said. “There are other platforms that are worse, but Discord keeps coming up over and over again.”

Research institutions such as the Institute for Strategic Dialogue found that Discord serves as a hub for socializing and community-building across far-right groups, including Catholic extremists, the white supremacist Atomwaffen Division and the antigovernment Boogaloo movement.

Extremist groups value the platform’s layers of privacy and anonymity, as well as its chat and video functions and collaborative nature, Guhl said. Berge described it as a walled garden, or an online environment where user access to content can be controlled. Servers come with the capability to assign hierarchy to different members and allow some members to access information that others can’t, the researchers said.

“In, say, a Twitter direct-messaging thread or Facebook DM, you don’t really have levels and hierarchies,” Squire said. “Discord really allows you to have more fine-grained ranking structures.”

Another reason extremists are prevalent on the platform is its roots in gaming, Guhl surmised.

Rachel Kowert, a globally recognized researcher on gaming and mental health, has spent five years researching extremism in video game communities. Though gaming itself is a powerful tool for connection and growth, extreme and hateful ideologies are now commonplace in those spaces, Kowert said.

“If you’re spending a lot of time in the social or gaming spaces where misogyny is commonplace, that can in turn start to internalize in the way you see the world and interact in it,” Kowert said.

Fighting a dark legacy

The existence of far-right groups on Discord — and the high-profile instances of extremism on the platform in the past several years — has spawned its “extremist legacy,” one from which it’s now trying hard to distance itself, said Berge.

Discord said it removed more than 2,000 far-right-affiliated servers following the “Unite the Right” rally. After the Buffalo killings, it removed Gendron’s server and worked to prevent the spread of content related to the attack, the company said. At that point, Discord acknowledged it “must do more to remove hate and violent extremism.”

“We created Discord to be a place for people to find belonging, and hate and violence are in direct opposition to our mission,” the company said in a statement at the time. “We take our commitment to these principles seriously and will continue to invest in and deploy resources.”

Earlier this year, the company reported that 15% of its staff works on its user safety team, which cracks down on harassment, hateful conduct, inappropriate contact, violent and abusive imagery, violent extremism, misinformation, spam, fraud, scams and other illegal behavior.

During the investigations into Teixeira and Jason Gray, Discord officials immediately cooperated with law enforcement, a company spokesperson told Military Times. And in recent months, Discord has leaned on machine-learning technology to moderate content.

“We expressly prohibit using Discord for illegal activity, which includes the unauthorized disclosure of classified documents,” the spokesperson said.

The company publishes reports each quarter showing actions taken against various accounts and servers. The latest report, published in January, says Discord disabled 6,109 accounts and removed 627 servers that espoused violent extremism during the last few months of 2023.

Squire and Guhl agreed that Discord is “pretty good” at responding to extremist content. Guhl credited the company for including extremism and hate speech in its community guidelines, as well as for deleting servers on a regular basis that breach its terms of service. Discord also created a channel where Squire could flag questionable content on the platform, and the company has been receptive to the concerns she’s raised, she said.

“I credit where credit is due, and I have to give them credit for that,” Squire said. “I think it’s taken seriously, and there are other platforms that I could not say that about.”

Extremists are ‘absolutely still there’

Berge applauded Discord for ramping up the technology behind its moderation and for introducing IP bans, which restrict a device from accessing the platform, rather than just an account. Still, she sees room for improvement.

Discord should place more emphasis on educating moderators and users about how to recognize when someone is being radicalized and pulled into an extremist space, Berge said. She also criticized the platform for disbanding a program in 2023 that included hundreds of volunteer moderators.

“It wasn’t Discord’s automated flagging systems that caught national security secrets being leaked by Jack Teixeira. It took other users and community moderators digging into it and someone finally reporting it,” Berge said. “Elevating people and giving them tools to moderate is absolutely central to protecting the platform, and that’s one area where I think they’re taking a step back.”

Berge is still researching communities on Discord, four years after she first uncovered a network of white supremacists using the platform as a recruitment ground. Despite its community guidelines and efforts to remove offending servers and accounts, Discord still serves as a meeting place for pockets of extremism.

“They’re harder to find, but they are absolutely still here. We’re still finding them,” Berge said. “It is still one of the most popular spaces for people to congregate, share and be in community with each other, for better or for worse.”

Discord remains the “platform of choice” for some hate groups, noted Squire, who described the company’s fight against extremists as playing whack-a-mole: As soon as one is removed, another pops up. A lack of institutional knowledge among far-right extremist groups is partly to blame, she said.

“Everybody’s always fresh, and they don’t have any structure for teaching one another and learning from mistakes of the past,” Squire said. “That’s convenient for us, because as we keep amassing knowledge, they make the mistake of reusing the technology that’s most convenient, rather than being strategic.”

This story was produced in partnership with Military Veterans in Journalism. Please send tips to MVJ-Tips@militarytimes.com.

The journalists reported seeing nothing wrong on the flight deck, which was precisely the point of Hill’s invitation. Ike and its crew remained on station, with no hole in the deck.

Two weeks earlier, a spokesman for Yemen’s Houthi rebel movement announced that the rebels had struck the Eisenhower with a barrage of missiles to punish the United States for its support of Israel in its war against Hamas.

On X (formerly Twitter), Houthi supporters shared a video allegedly showing a large crater at the forward end of the Eisenhower’s flight deck. Other accounts posted a different image of a fiery blast aboard the ship.

The purported evidence of a strike spread quickly across Chinese and Russian social media platforms, thanks in part to the efforts of Russian sites with a reputation in the West for spreading disinformation.


The Houthis’ online conjuring of a successful attack on Ike that never happened complements their months-long campaign to disrupt commercial shipping in the Red Sea that has sunk commercial vessels and injured civilian mariners.

And while the U.S. military and allies regularly hit back with airstrikes against Houthi missile launchers and other assets in Yemen, the Pentagon is less prepared to defend against the online lies and disinformation that the Houthis are spreading.

In the instance of the false Ike attack, Capt. Hill took matters into his own hands, leveraging his 86,000 followers on X. The day after the false claims emerged, Hill began to post videos and still images showing normal operations aboard his ship, including a plane landing on the flight deck and trays of muffins and cinnamon buns fresh from the oven in the ship’s bakery.

I infiltrated the bake shop because I smelled something interesting from way down the p-way. pic.twitter.com/8BkWFdI5LU

— Chowdah Hill (@ChowdahHill) May 31, 2024

Meanwhile, independent analysts exposed how the Houthis generated their false evidence of a missile strike on the Eisenhower.

An Israeli analyst demonstrated that the supposed photograph of a crater on the carrier’s flight deck consisted of a stock image of a hole superimposed on an overhead shot of the Eisenhower taken from satellite imagery dated almost a year before the alleged strike.

This is a photoshopped satellite image of the USS Eisenhower docked at Pier 12 at the Naval Station Norfolk in Virginia, taken on April 10th, 2023.

— Tal Hagin (@talhagin) June 4, 2024

Additionally, here is the Shutterstock image used for the "impact". https://t.co/CWagV062Xl pic.twitter.com/eqYsb04WVM

The fictional attack on Ike did not come as a surprise to anyone tracking Houthi disinformation efforts. In an ironic example from March, a Telegram channel and a pro-Houthi website shared an AI-generated image of a burning vessel they identified as the Pinocchio, an actual commercial ship the Houthis had targeted but missed.

The Houthis’ supporters had pulled their supposed evidence from a website that shared free stock images. However, no one from the Pentagon officially debunked this image as the Israeli analyst did for the fake photos of Ike.

In addition to these forgeries, pro-Houthi accounts have posted actual images of commercial vessels in flames, claiming the destruction resulted from Houthi attacks.

Yet in those cases, one image showed a burning ship on the Black Sea while another showed events that took place off the coast of Sri Lanka. Pro-Houthi posters even attempted to portray a blurry photo of a distant volcano as a successful strike on an Israeli ship.

This deluge of deceptively labeled images was also met with crickets from the Pentagon.

The U.S. military appears to grasp the need to counter disinformation spread by the Houthis and other regional adversaries. In February, the Joint Maritime Information Center, or JMIC, launched its efforts to provide accurate information to shipping companies about Houthi strikes, both real and imagined.

The JMIC operates under the umbrella of the Combined Maritime Forces – a naval partnership of 44 nations under the command of the top U.S. admiral in the region, who also serves as commander of U.S. 5th Fleet.

This is a start, but the Navy has yet to show that it can debunk false information as quickly as the Houthis post it online.

It is fortunate that an Israeli civilian had the skill and commitment necessary to expose the alleged crater aboard the Eisenhower as a work of photoshopping. He posted his conclusions on X four days after the Houthis publicized the supposed attack. Ideally, the Navy itself should be prepared to debunk such propaganda as soon as it appears.

Standing up this kind of capability should be a priority for the JMIC, which could include such efforts in its existing weekly updates.

It is important to act now before the Houthis’ disinformation apparatus becomes more sophisticated. Already, one of its supporters’ fake images of a burning ship garnered 850,000 views on X.

Moreover, the challenge is not limited to the Red Sea or the Middle East. Military forces in every command should have public affairs and open-source intelligence personnel working together to debunk false and exaggerated claims of enemy success on the battlefield.

Max Lesser is senior analyst on emerging threats at The Foundation for the Defense of Democracies, a non-profit, non-partisan think tank.

A senior Justice Department official raised the concerns in a court filing on Friday that sought to justify keeping the recording under wraps. The Biden administration is seeking to convince a judge to prevent the release of the recording of the president’s interview, which focused on his handling of classified documents.

The admission highlights the impact AI-manipulated disinformation could have on voting and the limits of the federal government’s ability to combat it.

A conservative group that’s suing to force the release of the recording called the argument a “red herring.”

Mike Howell of the Heritage Foundation accused the Justice Department of trying to protect Biden from potential embarrassment. A transcript of the interview showed the president struggling to recall certain dates and confusing details but showing a deep recall of information at other times.

“They don’t want to release this audio at all,” said Howell, executive director of the group’s oversight project. “They are doing the kitchen sink approach and they are absolutely freaked out they don’t have any good legal argument to stand on.”

The Justice Department declined to comment Monday beyond its filing.

Biden asserted executive privilege last month to prevent the release of the recording of his two-day interview in October with special counsel Robert Hur. The Justice Department has argued witnesses might be less likely to cooperate if they know their interviews might become public. It has also said that Republican efforts to force the audio’s release could make it harder to protect sensitive law enforcement files.

Republican lawmakers are expected to press Attorney General Merrick Garland at a hearing on Tuesday about the department’s efforts to withhold the recording. According to prepared remarks, Garland will tell lawmakers on the House Judiciary Committee that he will “not be intimidated” by Republican efforts to hold him in contempt for blocking their access to the recording.

Sen. Mark Warner, the Democratic chair of the Senate Intelligence Committee, told The Associated Press that he was concerned that the audio might be manipulated by bad actors using AI. Nevertheless, the senator said, it should be made public.

“You’ve got to release the audio,” Warner said, though it would need some “watermarking components, so that if it was altered” journalists and others “could cry foul.”

In a lengthy report, Hur concluded that no criminal charges were warranted over Biden’s handling of classified documents. His report described the 81-year-old Democrat’s memory as “hazy,” “poor” and having “significant limitations.” It noted that Biden could not recall such milestones as when his son Beau died or when he served as vice president.

Biden’s aides have long been defensive about the president’s age, a trait that has drawn relentless attacks from Donald Trump, the presumptive GOP nominee, and other Republicans. Trump is 77.

The Justice Department’s concerns about deepfakes came in court papers filed in response to legal action brought under the Freedom of Information Act by a coalition of media outlets and other groups, including the Heritage Foundation and the Citizens for Responsibility and Ethics in Washington.

An attorney for the media coalition, which includes The Associated Press, said Monday that the public has the right to hear the recording and weigh whether the special counsel “accurately described” Biden’s interview.

“The government stands the Freedom of Information Act on its head by telling the Court that the public can’t be trusted with that information,” the attorney, Chuck Tobin, wrote in an email.

Bradley Weinsheimer, an associate deputy attorney general for the Justice Department, acknowledged “malicious actors” could easily utilize unrelated audio recordings of Hur and Biden to create a fake version of the interview.

However, he argued, releasing the actual audio would make it harder for the public to distinguish deepfakes from the real one.

“If the audio recording is released, the public would know the audio recording is available and malicious actors could create an audio deepfake in which a fake voice of President Biden can be programed to say anything that the creator of the deepfake wishes,” Weinsheimer wrote.

Experts in identifying AI-manipulated content said the Justice Department had legitimate concerns in seeking to limit AI’s dangers, but its arguments could have far-reaching consequences.

“If we were to go with this strategy, then it is going to be hard to release any type of content out there, even if it is original,” said Alon Yamin, co-founder of Copyleaks, an AI-content detection service that primarily focuses on text and code.

Nikhel Sus, deputy chief counsel at Citizens for Responsibility and Ethics in Washington, said he has never seen the government raise concerns about AI in litigation over access to government records. He said he suspected such arguments could become more common.

“Knowing how the Department of Justice works, this brief has to get reviewed by several levels of attorneys,” Sus said. “The fact that they put this in a brief signifies that the Department stands behind it as a legal argument, so we can anticipate that we will see the same argument in future cases.”

Chinese actors exploited the unfolding chaos and took to social media, where they shared a conspiracy that the fire was the result of a “meteorological weapon” being tested by the U.S. Department of Defense. According to new analysis from Microsoft Corporation, a worldwide technology company, the Chinese accounts posted photos that were created with generative artificial intelligence, which uses new technology that can create images from written prompts.

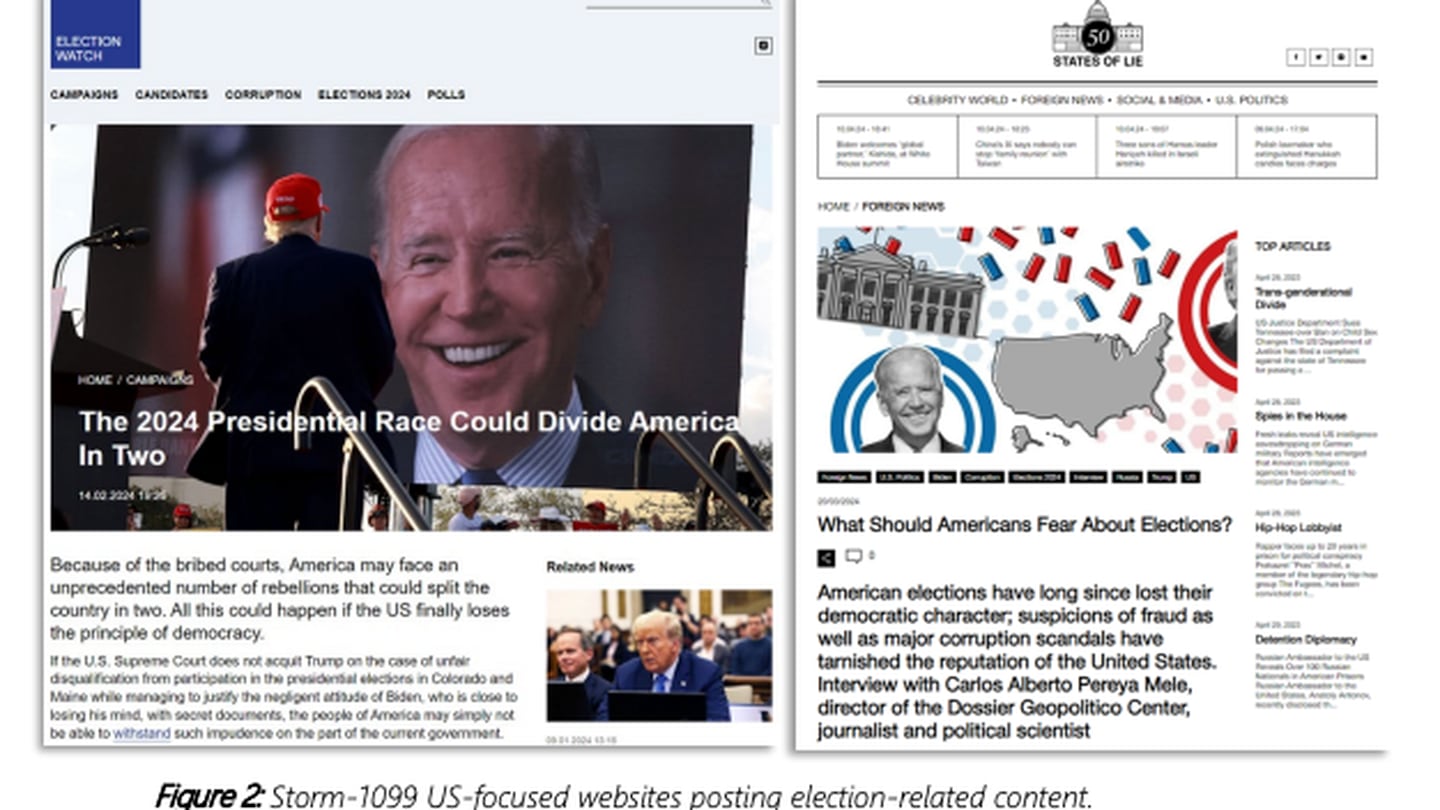

The situation exemplifies two challenges that some experts are warning about ahead of the presidential election in November: The use of generative AI to create fake images and videos, and the emergence of China as an adversary that stands ready and willing to target the United States with disinformation. Academics are also voicing concerns about a proliferation of alternative news platforms, government inaction on the spread of disinformation, worsening social media moderation and increased instances of public figures inciting violence.

An environment ripe for disinformation is coinciding with a year during which more than 50 countries are holding high-stakes elections. Simply put, it’s a “very precarious year for democracy,” warned Mollie Saltskog, a research fellow at The Soufan Center, a nonprofit that analyzes global security challenges. Some of the messaging meant to sow division is reaching veterans by preying on their sense of duty to the U.S., some experts warned.

“Conspiracy theories are a threat to vulnerable veterans, and they could drag your loved ones into really dark and dangerous places,” said Jacob Ware, a research fellow at the Council on Foreign Relations who published a book this year about domestic terrorism.

“The seeds are there for this kind of activity again, and we need to make the argument that protecting people from conspiracy theories is in their best interest, not just in the country’s.”

The threat of state-backed disinformation

China kept to the sidelines during the 2016 and 2020 presidential elections, watching as Russia targeted the U.S. with chaos-inducing disinformation, according to the U.S. intelligence community. But Beijing’s disinformation capabilities have increased in recent years, and the country has shown a willingness to get involved, Saltskog said.

In a report published in April, Microsoft warned that China was preparing to sow division during the presidential campaign by using fake social media accounts to discover what issues divide Americans most.

The accounts claimed that a train derailment in Kentucky was deliberately caused by the U.S. government, and they polled Americans about issues like climate change, border policies and racial tensions. China uses the information gathered to “understand better which U.S. voter demographic supports what issue or position and which topics are the most divisive, ahead of the main phase of the U.S. presidential election,” the technology behemoth warned.

“The primary concern today, in our assessment, is Russia, China and Iran, in that order,” Saltskog said. “Specifically, when we talk about China … we’ve seen with the Hawaii wildfire example that they have AI-powered capabilities to produce disinformation campaigns targeting the U.S. It’s certainly very concerning.”

Envoys from China and the U.S. are set to meet this week to discuss the risks of artificial intelligence and how the countries could manage the technology. A senior official in President Joe Biden’s administration told reporters Friday that the agenda does not include election interference, but noted the topic might come up in discussion.

“In previous engagements, we have expressed clear concerns and warnings about any [People’s Republic of China] activity in this space,” a senior administration official said. “If the conversation goes in a particular direction, then we will certainly continue to express those concerns and warnings about activity in that space.”

Logically, a British tech company that uses artificial intelligence to monitor disinformation around the world, has been tracking Russian-sponsored disinformation for years. Kyle Walter, the company’s head of research, believes Russia is positioned to increase its spread of falsehoods during the run-up to the November election, likely focusing on divisive issues, such as immigration and the U.S. economy.

Russia isn’t seeking to help one candidate over another, Walter added. Rather, it’s trying to sow chaos and encourage Americans to question the validity and integrity of their voting process.

Microsoft’s threat analysis center published another report at the end of April, saying that Russian influence operations to target the November election have already begun. The propaganda and disinformation campaigns are starting at a slower tempo than in 2016 and 2020, but they’re more centralized under the Russian Presidential Administration than in the past, Microsoft officials said.

So far, Russia-affiliated accounts have been focusing on undermining U.S. support for Ukraine, pushing disinformation meant to portray Ukrainian President Volodymyr Zelensky as unethical and incompetent, and arguing that any American aid to Ukraine was directly supporting a corrupt and conspiratorial regime, the report states.

State-backed disinformation campaigns like these have used images of U.S. service members and targeted troops and veterans during the previous two presidential elections.

A study from Oxford University in 2017 found Russian operatives disseminated “junk news” to veterans and service members during the 2016 election. In 2020, Vietnam Veterans of America warned that foreign adversaries were aiming disinformation at veterans and service members at a massive scale, posing a national security threat.

“It’s certainly happened historically, and it’s certainly a threat to be aware of now,” Saltskog said.

A social media ‘hellscape’

In March, the pause in public sightings of Kate Middleton, along with the lack of updates regarding her health following abdominal surgery, created a breeding ground for conspiracy theories.

Scores of memes and rumors spread in an online fervor until Middleton posted a video March 20, in which the Princess of Wales shared the news that she had been diagnosed with an undisclosed type of cancer. Some who shared conspiracies responded with regret.

The situation offered a public example of how conspiracy thinking snowballs on social media platforms, as well as the real harm it can cause, said AJ Bauer, an assistant professor at the University of Alabama, where he studies partisan media and political communications.

“It does reinforce the fact that social media gives us an opportunity to crowdsource and spin conspiracy theories and conspiracy thinking,” Bauer said. “It can be done for a kind of whimsy, or it can be done for harm, and the line between whimsy and harm is a fine one. It can tip from one to the other pretty quickly.”

Social media platforms were blamed during the 2016 and 2020 presidential election seasons for the influence campaigns that gained traction on their sites. Since then, the situation has only worsened, several experts argued.

Bauer specifically blamed recent changes at X, formerly Twitter, which was taken over in 2022 by billionaire Elon Musk.

Musk has used the platform to endorse at least one antisemitic conspiracy theory, and several large corporations withdrew from the platform after their ads were displayed alongside pro-Nazi content. Bauer described the site as a “hellscape” that is “objectively worse than it was in 2020 and 2016.”

“One really stark difference is that in 2020, you had a lot of big social media platforms like Twitter and Meta trying at least to mitigate disinformation and extremist views because they got a lot of blame for the chaos around the 2016 election,” Bauer said. “Part of what you see going into this election is that those guardrails are down, and we’re going to experience a free-for-all.”

In addition to the changes at X, layoffs last year struck content-moderation teams at Meta, Amazon, and Alphabet (the owner of YouTube), and have led to fears that the platforms would not be able to curb online abuse or remove deceptive disinformation.

“That timing could not be worse,” Saltskog said. “We’re going to have a much thinner bench when it comes to the people inside social media platforms who do this.”

Kurt Braddock, an assistant professor at American University who studies extremist propaganda, argued that social media platforms don’t have financial incentive to moderate divisive or misleading content.

“Their profits are driven by engagement, and engagement is often driven by outrage,” Braddock said.

Because of this, Braddock believes there should be more focus placed on teaching people — especially younger people — how to spot disinformation online.

A study last year by the Center for Countering Digital Hate found that teenagers were more likely than U.S. adults to believe conspiracy theories. Another study by the University of Cambridge found that people ages 18 to 29 were worse than older adults at identifying false headlines, and the more time people spent online recreationally, the less likely they were to spot misinformation.

The average age of the active-duty military is 28.5, according to a Defense Department demographic profile published in 2023, and new recruits are typically in their early 20s. Because of the young age of the force, Braddock thinks the Defense Department should be involved in teaching news literacy.

The Pentagon and Department of Veterans Affairs did not respond to a request for comment about any efforts to help service members and veterans distinguish accurate information online.

“They’ve grown up in the digital age, and for some it’s been impossible to differentiate what’s real and what’s not real,” Braddock said. “They’ve essentially been thrown to the wolves and don’t have the education to be able to distinguish the two. I think there needs to be a larger effort toward widespread media literacy for young people, especially in populations like the military.”

AI speeds disinformation

Overall, people are getting better at spotting disinformation because of awareness efforts over the past several years, Braddock believes.

However, just as more people were becoming accustomed to identifying false information, the landscape changed, he said. Generative AI gained traction last year, prompting the launch of tools that can create new images and videos, called deepfakes, from written descriptions.

Since Hamas launched a surprise attack on Israel on October 7, these digital tools have been used to create propaganda about the ensuing conflict. Some AI images portray injured or frightened Palestinian children running from air strikes in Gaza, while others depict crowds of people waving Israeli flags and cheering for the Israel Defense Forces.

“This is a new thing, and most people aren’t prepared to differentiate between what’s real and what’s not,” Braddock said. “We need to stay on top of these different technologies which can be used to reach a large amount of people with propaganda. It only takes one person to do a lot of damage.”

The Soufan Center and Council on Foreign Relations consider AI to be the top concern heading into November. The technology is developing faster than Congress can work to regulate it, and social media companies are likely to struggle to moderate AI-generated content leading up to the election, Ware said.

As Congress grapples with reining in AI, some states are taking the lead and scrambling to pass legislation of their own. New Mexico is the latest to pass a law that will require political campaigns to provide a clear disclaimer when they use AI in their advertisements. California, Texas, Washington, Minnesota and Michigan have passed similar laws.

Ware said that among those working to counter domestic terrorism, AI has been treated like “a can getting kicked down the road” — a known problem that’s been put off repeatedly.

“We knew it was a specter that was out there that would one day really affect this space,” Ware said. “It’s arrived, and we’ve all been caught unprepared.”

The technology can accelerate the speed and scale at which people can spread conspiracy theories that radicalize others into extremist beliefs, Ware added. At The Soufan Center, researchers have found that generative AI can produce high quantities of false content, which the center warned could have “significant offline implications” if it’s used to call for violent action.

“You can create more false information at scale. You can just ask an AI-powered language model, ‘Hey, create X number of false narratives about this topic,’ and it will generate it for you,” Saltskog said. “Just the speed and scale and efficacy of it is very, deeply concerning to us experts working in this field.”

The creation of AI images and videos is even more concerning because people tend to believe what they can see, Saltskog said. She suggested people look at images and videos carefully for telltale signs of digital deception.

The technology is still developing, and it’s not perfect, she said. Some signs of deepfakes could be hands that have too many or too few fingers, blurry spots, the foreground melding into the background and speech not aligning with how the subject’s mouth is moving.

“These are things the human brain catches onto. You’re aware of it and attuned to it,” Saltskog said. “Your brain will say, ‘Something is off with this video.’”

As Congress and social media platforms lag in regulating AI and moderating disinformation, Americans have been left to figure it out for themselves, Ware argued. Bauer suggested people do their homework about their source of news, which includes determining who published it, when it was published and what agenda the publisher might have. Saltskog advised people to be wary of anything that elicits a strong emotion because that’s the goal of those pushing propaganda.

Similarly, Ware said that if something social media users see seems unbelievable, it likely is. He suggested they look for other sources providing the same information to help determine whether it’s true.

“People are going to take it upon themselves to figure this out, and it’s going to be through digital literacy and having faith in your fellow Americans,” Ware said. “The stories that are trying to anger you or divide you are probably doing so with an angle, as opposed to a pursuit of the truth.”

This story was produced in partnership with Military Veterans in Journalism. Please send tips to MVJ-Tips@militarytimes.com.

The Strategic Support Force, or SSF, was created on Dec. 31, 2015. It existed for a little more than eight years.

After China dissolved the SSF on April 19, it established an Information Support Force, with President Xi Jinping present at its investiture ceremony in Beijing the same day.

Its first commander is Lt. Gen. Bi Yi, a former deputy commander of the SSF. The Information Support Force is directly subordinate to the Central Military Commission, the top political party organ that oversees China’s armed forces.

Senior Col. Wu Qian, a Defense Ministry spokesperson, said the change is part of “building a strong military, and a strategic step to establish a new system of services and arms and improve the modern military force structure.”

He added that the Information Support Force underpins “coordinated development and application of network information systems.” This suggests it is responsible for command and control, information security, and intelligence dissemination.

He also said the move would have “profound and far-reaching significance” on PLA modernization. However, Brendan Mulvaney, the director of the U.S. Air Force’s China Aerospace Studies Institute, told Defense News it’s unlikely to be “as big of a shift as the 2015-2016 reforms,” which overhauled the PLA.

The PLA considers the information domain as important as the four traditional domains of air, land, sea and space.

The PLA now has three nascent arms — the Information Support Force, Cyberspace Force and Aerospace Force. It appears the latter two were existing SSF departments that China renamed.

After the shakeup, the PLA is organized into four services and four arms. The services are the existing Army, Navy, Air Force and Rocket Force; the arms are the three previously mentioned forces plus the existing Joint Logistics Support Force.

The Cyberspace Force will subsume the responsibilities of the SSF’s former Network Systems Department, whose mandate was offensive and defensive cyber operations.

Indeed, the Defense Ministry described the Cyberspace Force’s role as “reinforcing national cyber border defense, promptly detecting and countering network intrusions and maintaining national cyber sovereignty and information security.”

The Aerospace Force will assume the responsibilities of the SSF’s Space Systems Department, meaning it will supervise space operations and space launches. Wu said the force will “strengthen the capacity to safely enter, exit and openly use space.”

The ministry said that “as circumstances and tasks evolve, we will continue to refine the modern military force structure.”

Xi has repeatedly urged the PLA to do two things: modernize its readiness structure for high-tech combat, and loyally follow party diktats.

He has now ordered the Information Support Force to “resolutely obey the party’s command and make sure it stays absolutely loyal, pure and reliable.”

Pillar 2 of the agreement focuses on advanced tech the nations can develop and field together. There are eight working groups focused on cyber, quantum, artificial intelligence, electronic warfare, hypersonics, undersea warfare, information sharing, and innovation, each with a list of ideas to quickly test and push to operators.

Leaders told Defense News how this process is playing out in the undersea warfare working group and how they aim to bring new capabilities to the three navies as soon as this year.

Dan Packer, the AUKUS director for the Commander of Naval Submarine Forces who also serves as the U.S. lead for the undersea warfare working group, said April 4 that the group has four lines of effort: a torpedo tube launch and recover capability for a small unmanned underwater vehicle; subsea and seabed warfare capabilities; artificial intelligence; and torpedoes and platform defense.

On the small UUV effort, the U.S. Navy on its own in 2023 conducted successful demonstrations: one called Yellow Moray on the West Coast using HII’s Remus UUV; and another called Rat Trap on the East Coast using an L3Harris-made UUV.

L3Harris’ Integrated Mission Systems president Jon Rambeau told Defense News in March that his team had started with experiments in an office using a hula hoop with flashlights attached, to understand how sensors perceive light and sound. They moved from the office to a lab and eventually into the ocean, with a rig tethered to a barge that allowed the company’s Iver autonomous underwater vehicle to, by trial and error, learn to find its way into a small box that was stationary and then, eventually, moving through the water.

Torpedo tube launch

Rambeau said the UUV hardware is inherently capable of going in and out of the torpedo tube, but there’s a software and machine learning challenge to help the UUV learn to navigate various water conditions and safely find its way back into the submarine’s torpedo tube.

Virginia-class attack submarines can silently shoot torpedoes from their launch tubes without giving away their location. If submarines can also fill their tubes with small UUVs, they’d gain the ability to stealthily expand their reach and surveil a larger area around the boat.

During a panel discussion at the Navy League’s annual Sea Air Space conference on April 8, U.K. Royal Navy Second Sea Lord Vice Adm. Martin Connell told Defense News that his country, too, would accelerate its work on developing this capability. He said the U.K. plans to test it on an Astute-class attack submarine this year, and then based on what worked for the U.K. and the U.S., they’d determine how to scale up the capability.

Packer said this effort will “make UUV operations ubiquitous on any submarine. Today, it takes a drydock shelter. It takes divers. It takes a whole host of Rube Goldberg kinds of things. Once I get torpedo tube launch and recovery, it’s just like launching a torpedo, but they welcome him back in.”

He added that the team agreed not to integrate this capability onto Australia’s Collins-class conventionally powered submarines now, but Australia will gain this capability when it buys the first American Virginia-class attack submarine in 2032.

On subsea and seabed warfare, Packer said all three countries have an obligation to defend their critical undersea infrastructure. He noted the U.K. and Australia had developed ships that could host unmanned systems that can scan the seabed and ensure undersea cables haven’t been tampered with, for example.

Connell said during the panel the British and Australian navies conducted an exercise together in Australia involving seabed warfare. This effort took just six months from concept to trial, he said, adding he hopes the team can continue to develop even greater expeditionary capability through this line of effort.

Packer said a next step would be collectively developing effectors for these seabed warfare unmanned underwater vehicles — “what are the hammers, the saws, the screwdrivers that I need to develop for these UUVs to get effects on the seabed floor, including sensors.”

The primary artificial intelligence effort today involves the three nations’ P-8 anti-submarine warfare airplanes, though it will eventually expand to the submarines themselves.

Packer said the nations created the first secure collaborative development environment, such that they can all contribute terabytes of data collected from P-8 sensors. The alliance, using vendors from all three countries’ industrial bases, is working now to create an artificial intelligence tool that can identify all the biological sources of sounds the P-8s pick up — everything from whales to shrimp — and eliminate those noises from the picture operators see. This will allow operators to focus on man-made sounds and better identify potential enemy submarines.

Packer said the Navy never had the processing power to do something like this before. Now that the secure cloud environment exists, the three countries are moving out as fast as they can to train their AI tools “to detect adversaries from that data … beyond the level of the human operator to do so.”

For now, the collaboration is focused on P-8s, since foreign military sales cases already exist with the U.K. and Australia to facilitate this collaboration.

Connell, without specifying the nature of the AI tool, said the U.K. would put an application on its P-8s this year to enhance their onboard acoustic performance.

Packer noted the U.S. is independently using this capability on an attack submarine today using U.S.-only vendors and algorithms, but the AUKUS team plans to eventually share the full automatic target recognition tool with all three countries’ planes, submarines and surface combatants once the right authorities are in place.

And finally, Packer said the fourth line of effort is looking at the collective inventory of torpedoes and considering how to create more capability and capacity. Both the U.S. and U.K. stopped building torpedoes decades ago, and the U.S. around 2016 began trying to restart its industrial base manufacturing capability.

“The issue is that none of us have sufficient ordnance-, torpedo-building capability,” Packer said, but the group is looking at options to modernize British torpedoes and share in-development American long-range torpedoes with the allies — potentially through an arrangement that involves a multinational industrial base.

Vice Adm. Rob Gaucher, the commander of U.S. Naval Submarine Forces, said during the panel discussion that these AUKUS undersea warfare lines of effort closely match his modernization priorities for his own submarine fleet.

Pursuing these aims as part of a coalition, he said, strengthens all three navies.

“The more we do it, and the faster we do it, and the more we get it in the hands of the operators, the better. And then having three sets of operators to do it makes it even better,” Gaucher said.

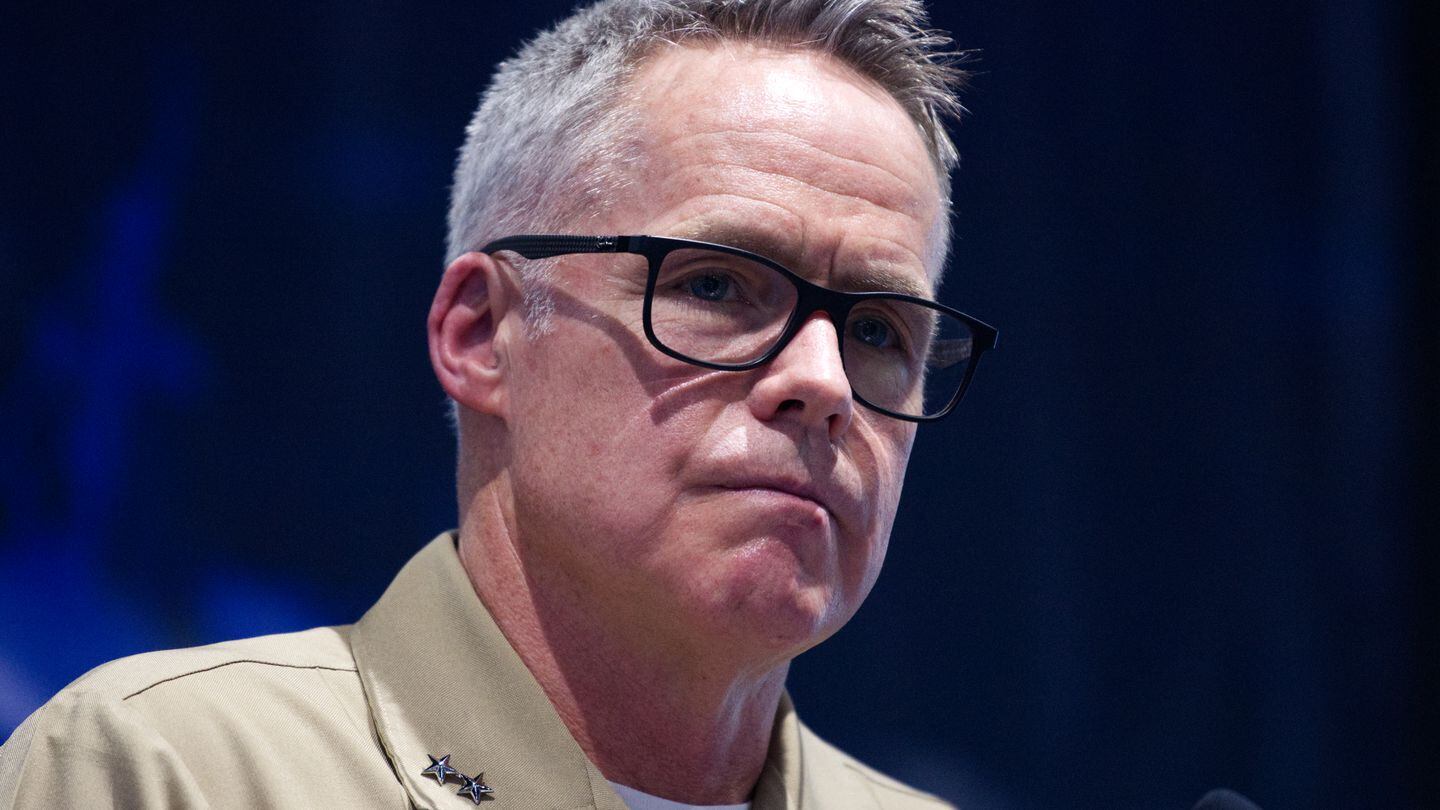

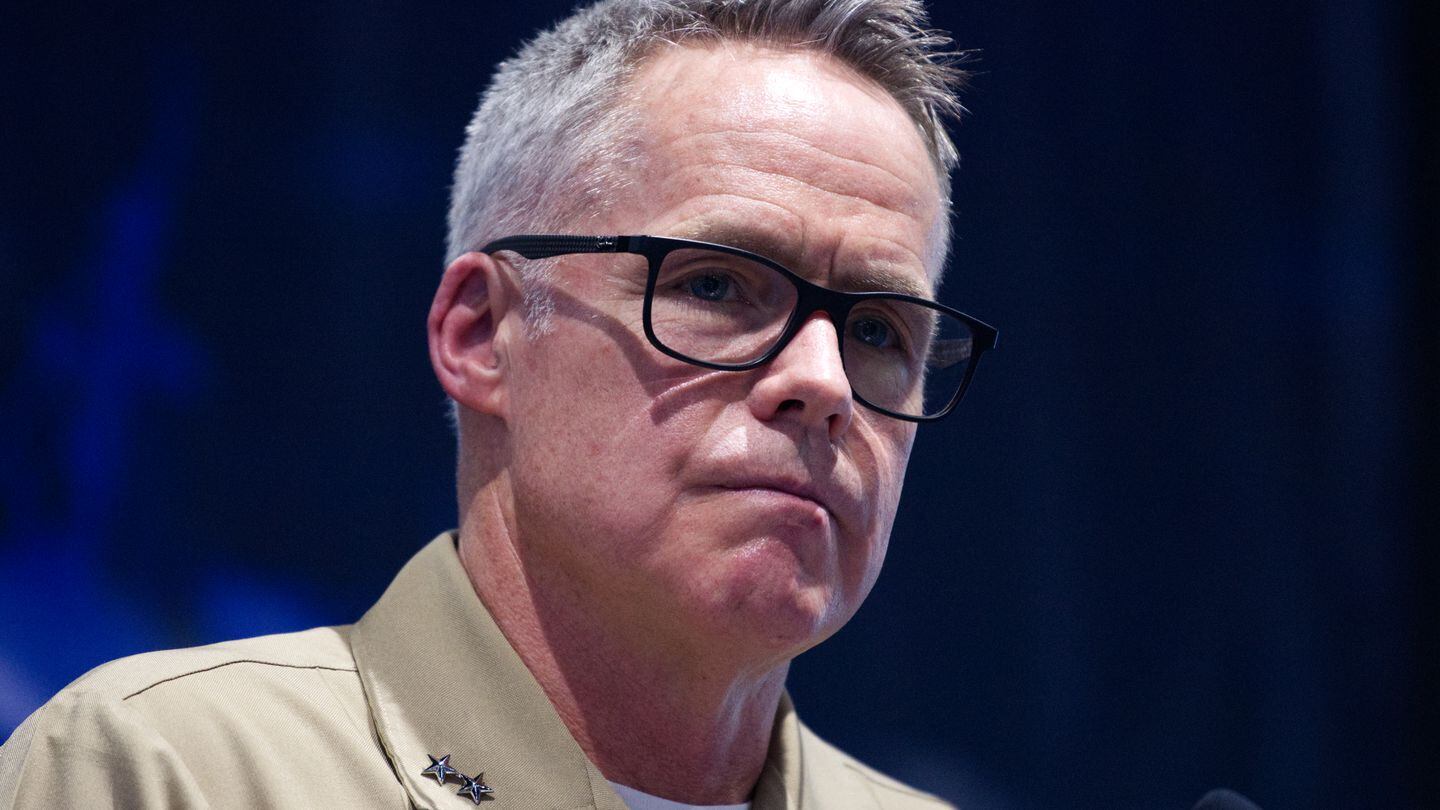

“I can’t say it enough. We are in demand, more in demand than we’ve ever been, and that will continue to increase,” Vice Adm. Kelly Aeschbach, leader of Naval Information Forces, said at the Sea-Air-Space conference going on this week in Maryland, just outside the nation’s capital. “My sense is, in the partnership we’ve had, we will have a persistent and growing presence within submarine crews over the coming years.”

The service in 2022 embedded information warfare specialists aboard subs to examine how their expertise aids underwater operations. A follow-up effort is now on the books, with information professional officers and cryptologic technicians joining two East Coast-based subs: the Delaware and the California.

The trials have so far proven fruitful, according to Aeschbach, who is colloquially known as “IBoss.” That said, staffing and other resources must be considered before any sweeping changes are made.

“It’ll be a slow evolution, I think, as we build out that capacity,” Aeschbach said. “Part of that’s just the reality, as we’ve talked about today, of some of the choices we have to make about investment.”

The Navy is also introducing information warfare systems into its live, virtual and constructive environments. The first few, focused on cryptology, meteorology and oceanography, will be uploaded in the fourth quarter of fiscal 2025, service officials have said. Other disciplines include communications, cryptology and electronic warfare, or the ability to use the electromagnetic spectrum to sense, defend and share data.

Tenets of information warfare — situational awareness, assured command-and-control, and the melding of intel and firepower — have enabled U.S. forces to swat down overhead threats in the Red Sea and Gulf of Aden. They have also assisted retaliatory strikes across the Greater Middle East.

Aeschbach cited as an example the USS Carney’s performance. The guided-missile destroyer and its crew spent the past six months intercepting attack drones and missiles launched by Iran-backed Houthi rebels in Yemen.

“Carney had the means to put the right weapon on the target through kinetic means,” Aeschbach said. “She also had the ability to defend herself. And, in all of that, the only part that I tell people is not information warfare is the operator pressing the button to release the missile.”

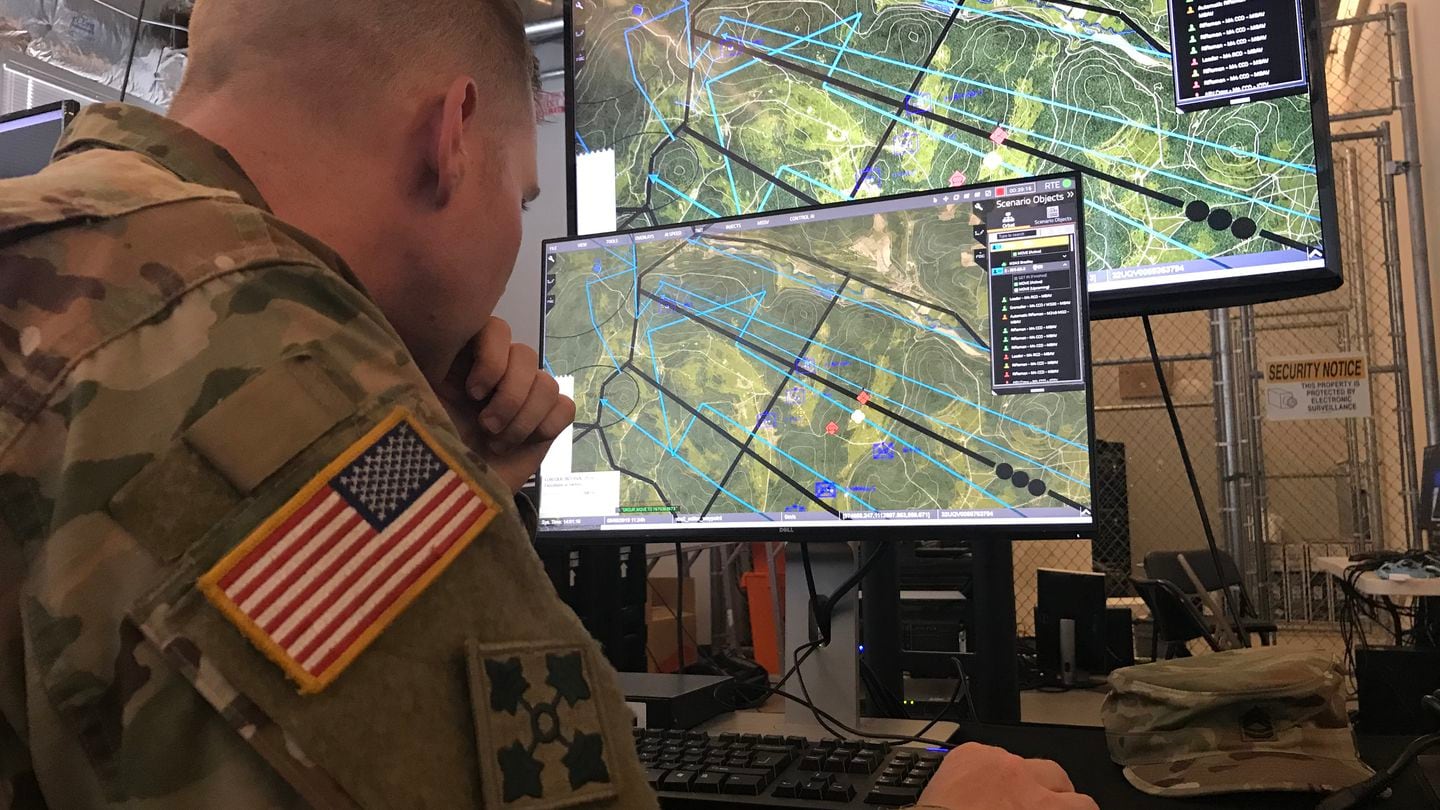

The geospatial intelligence company on March 12 said it won the latest phase of prototype work on the service’s One World Terrain, which compiles realistic and, in some cases, extremely accurate digital maps of territory across the globe for military purposes.

A Maxar spokesperson said the company was “not disclosing any financial details” of the deal. The announcement did not say how long the effort was expected to take. Three phases of the OWT prototype project were previously said to be worth nearly $95 million.

OWT is considered a critical component of the Army’s Synthetic Training Environment. The STE is meant to provide soldiers convincing, common and automatically generated environments in which they can study tactics and rehearse missions. Virtual worlds afford troops additional means of training while also saving real-world ammunition, fuel and wear and tear.

Maxar’s fourth phase of work will focus on delivering enhanced, believable terrain. The company has been involved with OWT since 2019.

“This latest award reflects the unique value of our 3D geospatial data for military simulation use cases,” Susanne Hake, Maxar’s general manager for U.S. government, said in a statement. “Our data, derived from our Precision3D product, offers an extremely accurate 3D representation of Earth, including real textures and superior accuracy of 3 [meters] in all dimensions.”

Maxar was acquired by private equity firm Advent International in 2022 in an arrangement worth $6.4 billion. Following the buy, the company reorganized into two businesses: Maxar Intelligence and Maxar Space Infrastructure.

The three-word statement was highlighted in bold letters at the opening of NATO’s March 4 briefing on the Polish leg of the alliance’s largest military exercise since 1988.

But even amid the resolute and calm tone of officials in the room, there was a palpable sense of apprehension among reporters.

A core theme of the speeches presented by NATO representatives revolved around transparency, specifically in showcasing what the Steadfast Defender exercise, and its subsidiary drill Dragon, led by Poland, would involve. Yet many officials were wary of answering questions related to Russia or lessons learned from the war in Ukraine.

On several occasions, officials were pressed about whether they had concerns over revealing their plans to Russia through events such as these, or even the possibility the Kremlin could intercept operational details.

“Of course we are concerned, everyone is concerned,” Brig. Gen. Gunnar Bruegner, assistant chief of staff at NATO’s Supreme Headquarters Allied Powers Europe, told Defense News. “[We need] to make sure we are safeguarding the critical information, but it does not relieve us from the requirement of making these exercises happen.”

“It is quite a balance you need to keep; you cannot showcase everything,” he said.

During a March 4 news conference, Maj. Gen. Randolph Staudenraus, director of strategy and policy at NATO’s Allied Joint Force Command Brunssum, told reporters that while the alliance does protect its communications, “we are also really trying to be transparent.”

The fine line between accountability and information security is one that some NATO members have recently grappled with. A notable example is the leak of a German discussion about potentially providing Ukraine with Taurus missiles. Russia intercepted audio from the web conference between German Air Force officials.

Through this, Moscow was able to get its hands on information regarding the potential supply of cruise missiles to Ukraine as well as operational scenarios of how the war could play out.

Russian officials said last month that the country views Steadfast Defender as a threat.

When it comes to that training event, Bruegner said, details provided to the media during briefings are meant to illustrate the bigger picture, but only in broad terms.

“The plans themselves and the details in there will not be made available to everyone. What you’re seeing here are slides NATO has unclassified,” he explained.

He also noted that an objective of the exercises is to showcase the integration of capabilities, and not necessarily what NATO would do in a contested setting.

“We for sure would not fly banners on the amphibious devices in a contested exercise, which would have involved having an opponent on the other side of the eastern benches of the river and would’ve looked different [than what we saw in the Dragon drill],” Bruegner said.

“Who goes there?” the deputy wrote. “The girls are so ugly.”

So goes the sordid wheeling and dealing that takes place behind the scenes in China’s hacking industry, as revealed in a highly unusual leak last month of internal documents from a private contractor linked to China’s government and police. China’s hacking industry, the documents reveal, suffers from shady business practices, disgruntlement over pay and work quality, and poor security protocols.

Private hacking contractors are companies that steal data from other countries to sell to the Chinese authorities. Over the past two decades, Chinese state security’s demand for overseas intelligence has soared, giving rise to a vast network of these private hacker-for-hire companies that have infiltrated hundreds of systems outside China.

Though the existence of these hacking contractors is an open secret in China, little was known about how they operate. But the leaked documents from a firm called I-Soon have pulled back the curtain, revealing a seedy, sprawling industry where corners are cut and rules are murky and poorly enforced in the quest to make money.

Leaked chat records show I-Soon executives wooing officials over lavish dinners and late-night binge drinking. They collude with competitors to rig bidding for government contracts. They pay thousands of dollars in “introduction fees” to contacts who bring them lucrative projects. I-Soon has not commented on the documents.

Mei Danowski, a cybersecurity analyst who wrote about I-Soon on her blog, Natto Thoughts, said the documents show that China’s hackers for hire work much like any other industry in China.

“It is profit-driven,” Danowski said. “It is subject to China’s business culture — who you know, who you dine and wine with, and who you are friends with.”

Hacking styled as patriotism

China’s hacking industry rose from the country’s early hacker culture, first appearing in the 1990s as citizens bought computers and went online.

I-Soon’s founder and CEO, Wu Haibo, was among them. Wu was a member of China’s first hacktivist group, Green Army — a group known informally as the “Whampoa Academy” after a famed Chinese military school.

Wu and some other hackers set themselves apart by declaring themselves “red hackers,” patriots who offered their services to the Chinese Communist Party, in contrast to the freewheeling, anarchist and anti-establishment ethos popular among many coders.

In 2010, Wu founded I-Soon in Shanghai. Interviews he gave to Chinese media depict a man determined to bolster his country’s hacking capacity to catch up with rivals. In one 2011 interview, Wu lamented that China still lagged far behind the United States: “There are many technology enthusiasts in China, but there are very few enlightened people.”

With the spread of the internet, China’s hacking-for-hire industry boomed, emphasizing espionage and intellectual property theft.

High-profile hacks by Chinese state agents, including one at the U.S. Office of Personnel Management where personal data on 22 million existing or prospective federal employees was stolen, got so serious that then-President Barack Obama personally complained to Chinese leader Xi Jinping. They agreed in 2015 to cut back on espionage.

For a couple of years, the intrusions subsided. But I-Soon and other private hacking outfits soon grew more active than ever, providing Chinese state security forces cover and deniability. I-Soon is “part of an ecosystem of contractors that has links to the Chinese patriotic hacking scene,” said John Hultquist, chief analyst of Google’s Mandiant cybersecurity unit.

These days, Chinese hackers are a formidable force.

In May 2023, Microsoft disclosed that a Chinese state-sponsored hacking group affiliated with China’s People’s Liberation Army called “Volt Typhoon” was targeting critical infrastructure such as telecommunications and ports in Guam, Hawaii, and elsewhere and could be laying the groundwork for disruption in the event of conflict.

Today, hackers such as those at I-Soon outnumber FBI cybersecurity staff by “at least 50 to one,” FBI director Christopher Wray said in January at a conference in Munich.

Seedy state-led industry

Though I-Soon boasted about its hacking prowess in slick marketing PowerPoint presentations, the real business took place at hotpot parties, late-night drinking sessions and poaching wars with competitors, leaked records show. A picture emerges of a company enmeshed in a seedy, sprawling industry that relies heavily on connections to get things done.

I-Soon leadership discussed buying gifts and which officials liked red wine. They swapped tips on who was a lightweight, and who could handle their liquor.

I-Soon executives paid “introduction fees” for lucrative projects, chat records show, including tens of thousands of RMB (thousands of dollars) to a man who landed them a 285,000 RMB ($40,000) contract with police in Hebei province. To sweeten the deal, I-Soon’s chief operating officer, Chen Cheng, suggested arranging the man a drinking and karaoke session with women.

“He likes to touch girls,” Chen wrote.

It wasn’t just officials they courted. Competitors, too, were targets of wooing over late-night drinking sessions. Some were partners: subcontractors or collaborators on government projects. Others were hated rivals who constantly poached their staff. Often, they were both.

One, Chinese cybersecurity giant Qi Anxin, was especially loathed, despite being one of I-Soon’s key investors and business partners.

“Qi Anxin’s HR is a green tea bitch who seduces our young men everywhere and has no morals,” COO Chen wrote to Wu, the CEO, using a Chinese internet slur that refers to innocent-looking but ambitious young women.

I-Soon also has a complicated relationship with Chengdu 404, a competitor charged by the U.S. Department of Justice for hacking over 100 targets worldwide. They worked with 404 and drank with their executives but lagged on payments to the company and were eventually sued over a software development contract, Chinese court records show.

The source of the I-Soon documents is unclear, and executives and Chinese police are investigating. And though Beijing has repeatedly denied involvement in offensive hacking, the leak illustrates I-Soon and other hacking companies’ deep ties with the Chinese state.

For example, chat records show China's Ministry of Public Security gave companies access to proofs of concept of so-called "zero days," the industry term for a previously unknown software security hole. Zero days are prized because they can be exploited until detected. I-Soon company executives debated how to obtain them. Such flaws are regularly discovered at an annual Chinese state-sponsored hacking competition.

In other records, executives discussed sponsoring hacking competitions at Chinese universities to scout for new talent.

Many of I-Soon's clients were police in cities across China, a leaked contract list showed. I-Soon scouted for databases they thought would sell well with officers, such as Vietnamese traffic data to the southwestern province of Yunnan, or data on exiled Tibetans to the Tibetan regional government.

At times, I-Soon hacked on demand. One chat shows two parties discussing a potential “long-term client” interested in data from several government offices related to an unspecified “prime minister.”

A Chinese state body, the Chinese Academy of Sciences, also owns a small stake in I-Soon through a Tibetan investment fund, Chinese corporate records show.

I-Soon proclaimed their patriotism to win new business. Top executives discussed participating in China's poverty alleviation scheme, one of Chinese leader Xi Jinping's signature initiatives, to make connections. I-Soon CEO Wu suggested his COO become a member of Chengdu's People's Political Consultative Conference, a government advisory body composed of scientists, entrepreneurs, and other prominent members of society. And in interviews with state media, Wu quoted Mencius, a Chinese philosopher, casting himself as a scholar concerned with China's national interest.

But despite Wu’s professed patriotism, leaked chat records tell a more complicated story. They depict a competitive man motivated to get rich.

“You can’t be Lei Feng,” Wu wrote in private messages, referring to a long-dead Communist worker held up in propaganda for generations as a paragon of selflessness. “If you don’t make money, being famous is useless.”

Lax security, low pay

China’s booming hackers-for-hire industry has been hit by the country’s recent economic downturn, leading to thin profits, low pay and an exodus of talent, the leaked documents show.

I-Soon lost money and struggled with cash flow issues, falling behind on payments to subcontractors. In the past few years, the pandemic hit China's economy, causing police to pull back on spending, which hurt I-Soon's bottom line. "The government has no money," I-Soon's COO wrote in 2020.

Staff are often poorly paid. In a salary document dated 2022, most staff on I-Soon's safety evaluation and software development teams were paid just 6,500 yuan ($915) to 9,000 yuan ($1,267) a month, with only a handful receiving more than that. In the documents, I-Soon officials acknowledged the low pay and worried about the company's reputation.

Low salaries and pay disparities caused employees to complain, chat records show. Leaked employee lists show most I-Soon staff held a degree from a vocational training school, not an undergraduate degree, suggesting lower levels of education and training. Sales staff reported that clients were dissatisfied with the quality of I-Soon data, making it difficult to collect payments.

I-Soon is a fraction of China's hacking ecosystem. The country boasts world-class hackers, many employed by the Chinese military and other state institutions. But the company's troubles reflect broader issues in China's private hacking industry. The country's cratering economy, Beijing's tightening controls and the growing role of the state have led to an exodus of top hacking talent, four cybersecurity analysts and Chinese industry insiders told The Associated Press.

"China is no longer the country we used to know. A lot of highly skilled people have been leaving," said one industry insider, who declined to be named to speak on a sensitive topic. Under Xi, the person added, the growing role of the state in China's technology industry has emphasized ideology over competence, held down pay and made access to officials pivotal.

A major issue, people say, is that most Chinese officials lack the technical literacy to verify contractor claims. So hacking companies prioritize currying favor over delivering excellence.

In recent years, Beijing has heavily promoted China’s tech industry and the use of technology in government, part of a broader strategy to facilitate the country’s rise. But much of China’s data and cybersecurity work has been contracted out to smaller subcontractors with novice programmers, leading to poor digital practices and large leaks of data.

Despite the clandestine nature of I-Soon’s work, the company has surprisingly lax security protocols. I-Soon’s offices in Chengdu, for example, have minimal security and are open to the public, despite posters on the walls of its offices reminding employees that “to keep the country and the party’s secrets is every citizen’s required duty.” The leaked files show that top I-Soon executives communicated frequently on WeChat, which lacks end-to-end encryption.

The documents do show that staff are screened for political reliability. One metric, for example, shows that I-Soon checks whether staff have any relatives overseas, while another shows that employees are classified according to whether they are members of China’s ruling Communist Party.

Still, Danowski, the cybersecurity analyst, said many standards in China are often "just for show." But at the end of the day, she added, it may not matter.

“It’s a little sloppy. The tools are not that impressive. But the Ministry of Public Security sees that you get the job done,” she said of I-Soon. “They will hire whoever can get the job done.”

___

Soo reported from Hong Kong. AP Technology Writer Frank Bajak in Boston contributed to this report.

At the same time, the government sought to contain the domestic fallout from the leak and promised a quick investigation into how it was possible that a conversation by top German military personnel could be intercepted and published.

“It is absolutely clear that such claims that this conversation would prove, that Germany is preparing a war against Russia, that this is absurdly infamous Russian propaganda,” a spokesman for German Chancellor Olaf Scholz told reporters in Berlin.

Government spokesman Wolfgang Buechner said the leak was part of Russia’s “information war” against the West, and that the aim was to create discord within Germany.

The 38-minute recording features military officers discussing in German how Taurus long-range cruise missiles could be used by Kyiv against invading Russian forces. While German authorities haven’t questioned the authenticity of the recording, Scholz said a week ago that delivering these weapons to Ukraine isn’t an option — and that he doesn’t want Germany to be drawn into the war directly.

Russia’s foreign ministry, however, on Monday threatened Germany with “dire consequences” in connection with the leak, though it didn’t elaborate.

“If nothing is done, and the German people do not stop this, then there will be dire consequences first and foremost for Germany itself,” foreign ministry spokesperson Maria Zakharova said.

Relations between the two countries have continuously deteriorated since Russia invaded Ukraine two years ago.

The audio leak was posted on social media Friday by Margarita Simonyan, chief editor of Russian state-funded television channel RT, the same day that late opposition politician Alexei Navalny was laid to rest after his still-unexplained death two weeks ago in an Arctic penal colony. It also surfaced just weeks before Russia's presidential election.

In the leaked audio, four officers, including the head of Germany’s Air Force, Ingo Gerhartz, can be heard discussing deployment scenarios for Taurus missiles in Ukraine before a meeting with Defense Minister Boris Pistorius, German news agency dpa reported.

The officers then state that early delivery and rapid deployment of Taurus long-range cruise missiles would only be possible with the participation of German soldiers, and that training Ukrainian soldiers to deploy the Taurus on their own would be possible but would take months.

The recording also shows the German government hasn’t given its OK for the delivery of the cruise missiles sought by Ukraine, dpa reported.

There has been a debate in Germany on whether to supply the missiles to Ukraine as Kyiv faces battlefield setbacks, and while military aid from the United States is held up in Congress. Germany is now the second-biggest supplier of military aid to Ukraine after the U.S., and is further stepping up its support this year.

Scholz's insistence last week on not giving Taurus missiles to Ukraine came after Germany stalled for months on Ukraine's request for Taurus missiles, which have a range of up to 500 kilometers (310 miles) and could in theory be used against targets far inside Russian territory.

On Monday, the chancellor reiterated his stance during a visit to a school in Sindelfingen in southwestern Germany.

“I’m the chancellor and that’s why it’s valid,” he said regarding his “no” to the delivery of Taurus missiles, dpa reported.

Also on Monday, Germany's ambassador visited Russia's foreign ministry in Moscow. While Russian media reported that Ambassador Alexander Graf Lambsdorff had been summoned by the foreign ministry, the German government said his visit had been planned well before the audio was published.

Germany’s defense ministry tried to downplay the significance of the officers’ conversation in the leak — saying it was merely an “exchange of ideas” before a meeting with the defense minister.

The ministry said it was investigating how it was possible that a conversation by top German military personnel could be intercepted and leaked by the Russians. It promised to report its findings. Several German media outlets have reported that the officers were in a WebEx meeting when they were taped.

Buechner, the spokesman of the chancellor, said that the German government would also look into how “we can better counter targeted disinformation, especially from Russia.”

The Kremlin on Monday said that it looked forward to the results of the German government’s investigation.

"Mr. Scholz said that a fast, complete and effective investigation would be carried out. We hope that we will be able to find out the outcome of that investigation," spokesperson Dmitry Peskov said.

Katie Marie Davies reported from Manchester, United Kingdom.

Adm. Samuel Paparo, recognizing the tune, smiled as he grabbed the microphone. He then quipped about past lives and "a lot of illegal things" that took place as he came of age in the 1980s.

Times have since changed, and some have found religion in the military, Paparo said. And, much like the times, the demands of a modern-day warrior with a mean, mean stride are constantly evolving.

“We are in the middle of another epochal change,” he said. “And that is the dawn — and I do mean the dawn — of the information age.”

As the U.S. Defense Department prepares for potential confrontations with Russia or China and juggles counterterrorism operations in the Greater Middle East and Africa, it’s emphasizing data: how it’s collected; how it’s shared; and how it can be weaponized. But by some of the department’s own measures, including the 2023 Strategy for Operations in the Information Environment, it is falling behind.

State actors and extremist groups alike have long exploited the information ecosystem in an attempt to distort or degrade U.S. standing. Operating online and below the threshold of armed conflict ducks the consequences of physical battles, where personnel levels, accumulated stockpiles and technology budgets can make all the difference.

The Navy in November published a 14-page document laying out how it plans to get up to speed, arguing neither ship nor torpedo alone will strike the decisive blow in future fights. Rather, it stated, a marriage of traditional munitions and exquisite software will win the day.

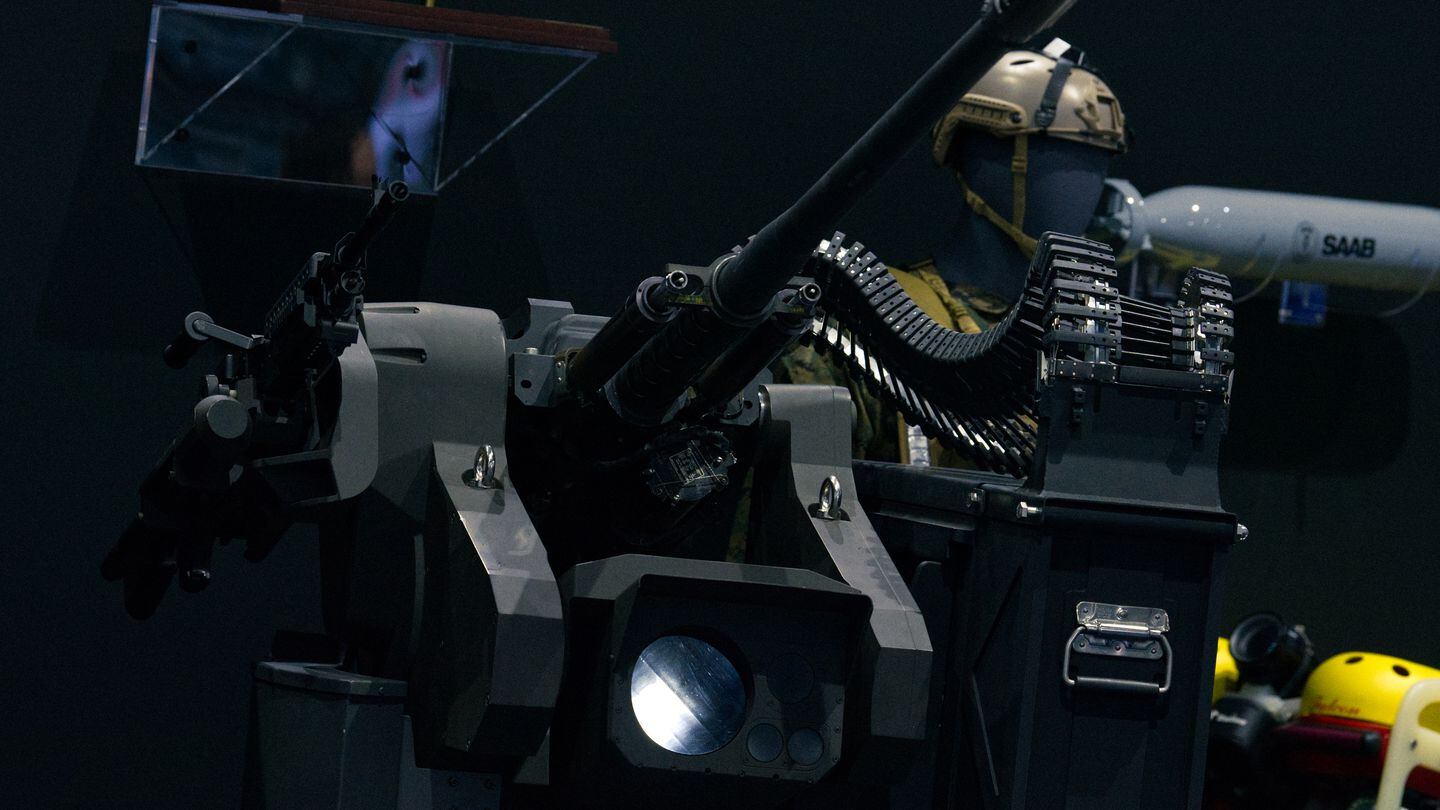

The thinking was prominent at the AFCEA- and U.S. Naval Institute-hosted West confab, where Paparo and other leaders spoke, and where some of the world’s largest defense contractors mingled and hawked their wares. Signs promised secure connectivity. Other screens advertised warrens of computerized pipes and tubes through which findings could flow.

“Who competes best in this — who adapts better, who’s better able to combine data, computing power and [artificial intelligence], and who can win the first battle, likely in space, cyber and the information domain — shall prevail,” Paparo said.

Subs and simulation

The U.S. has sought to invigorate its approach to information warfare, a persuasive brew of public outreach, offensive and defensive electronic capabilities, and cyber operations that can confer advantages before, during and after major events. Rapidly deployable teams of information forces that can shape public perceptions are a must, the Defense Department has said, as is a healthy workforce comprising military and civilian experts.

The Navy in 2022 embedded information warfare specialists aboard submarines to study how their expertise may aid underwater operations. That pilot program is now advancing into a second phase, with information professional officers and cryptologic technicians joining two East Coast subs, the Delaware and the California.

Years prior, the service made information warfare commanders fixtures of carrier strike groups.

“This is the first and the most decisive battle,” said Paparo, who previously told Congress that Indo-Pacific Command, his future post, is capable of wielding deception to alter attitudes and behaviors. “The information age will not necessarily replace some of the more timeless elements of naval combat, maneuver and fires, but will in fact augment them.”